Thangka Mural Super-Resolution Based on Nimble Convolution and Overlapping Window Transformer

Author(s): Ji, L., Wang, N., Chen, X., Zhang, X., Wang, Z., Yang, Y.

Published In: Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15038. Springer, Singapore

Cite: Ji, L., Wang, N., Chen, X., Zhang, X., Wang, Z., Yang, Y. (2025). Thangka Mural Super-Resolution Based on Nimble Convolution and Overlapping Window Transformer. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15038. Springer, Singapore. https://doi.org/10.1007/978-981-97-8685-5_15

Thangka murals are an important part of Tibet's cultural heritage, but most existing Thangka images are of low resolution, so super-resolution reconstruction of Thangka murals is important for protecting this heritage. Transformer-based methods have shown impressive performance in Thangka image super-resolution. However, they still face two major challenges:

- The self-attention mechanism used in Transformer models is not very sensitive to slender and curved edge textures;

- The sliding window mechanism used in Transformer models cannot fully achieve cross-window information interaction.

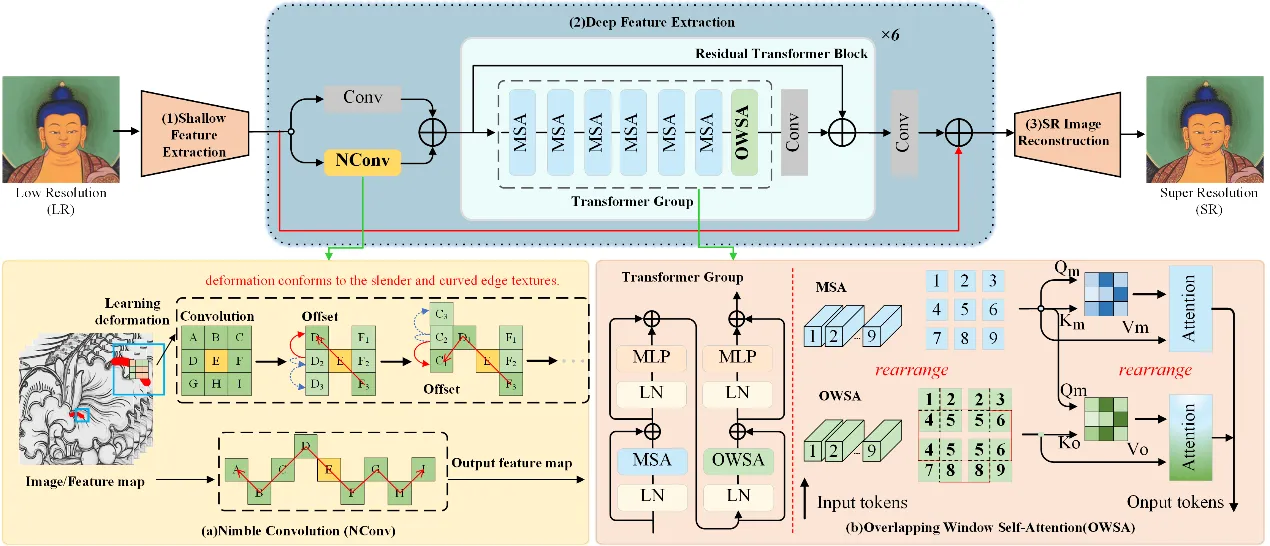

To resolve these problems, we propose a Thangka mural super-resolution reconstruction method based on Nimble Convolution (NConv) and Overlapping Window Self-Attention (OWSA). The work makes three contributions:

- a Nimble Convolution (NConv) that enhances the perception of slender and curved geometric structures;

- an Overlapping Window Self-Attention (OWSA) that enables more direct feature interaction among adjacent windows within the Transformer;

- a Thangka image super-resolution dataset, constructed here for the first time, which contains 8,868 pairs of 512 images. We expect this dataset to serve as a valuable reference for future research.

Both objective and subjective evaluations validated the competitive performance of our method.
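The overlapping-window idea behind OWSA can be illustrated with a small NumPy sketch. The abstract does not specify the exact design, so everything here is an assumption made for illustration: queries are taken from ordinary non-overlapping windows, keys and values come from windows enlarged by `overlap` pixels on each side, attention is single-head with no learned projections, and borders are zero-padded. The function names `partition_windows` and `overlapping_window_attention` are hypothetical and are not taken from the paper.

```python
import numpy as np


def partition_windows(x, window, overlap=0):
    """Split an (H, W, C) feature map into windows on a stride-`window` grid.

    Each window is enlarged by `overlap` pixels on every side (zero-padded at
    the image border). With overlap=0 this is plain non-overlapping partitioning.
    Returns an array of shape (num_windows, size, size, C), size = window + 2*overlap.
    """
    h, w, _ = x.shape
    if h % window or w % window:
        raise ValueError("H and W must be multiples of the window size")
    padded = np.pad(x, ((overlap, overlap), (overlap, overlap), (0, 0)))
    size = window + 2 * overlap
    tiles = [
        padded[i:i + size, j:j + size]
        for i in range(0, h, window)
        for j in range(0, w, window)
    ]
    return np.stack(tiles)


def softmax(z, axis=-1):
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def overlapping_window_attention(x, window=4, overlap=2):
    """Illustrative attention: queries from plain windows, keys/values from
    enlarged windows, so each window also attends to pixels of its neighbours.

    Assumption: identity projections and a single head, for brevity.
    Zero-padded border tokens take part in the softmax in this sketch.
    """
    c = x.shape[-1]
    q = partition_windows(x, window).reshape(-1, window * window, c)
    kv = partition_windows(x, window, overlap)
    kv = kv.reshape(kv.shape[0], -1, c)
    attn = softmax(q @ kv.transpose(0, 2, 1) / np.sqrt(c))
    return attn @ kv  # shape: (num_windows, window * window, C)


if __name__ == "__main__":
    features = np.random.default_rng(0).normal(size=(8, 8, 16))
    out = overlapping_window_attention(features, window=4, overlap=2)
    print(out.shape)
```

With `overlap=0` the key/value windows coincide with the query windows, which reduces to standard window attention with no cross-window interaction; a positive `overlap` lets each query window see a band of features from adjacent windows directly.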